Roblox Moderation System Changes in 2026 and Their Impact

In 2026, Roblox moderation system changes are reshaping how content and behavior are managed across the platform, and many players and developers have strong opinions about these updates. Roblox has always relied on a combination of automated filters, human moderation, and community reporting to keep the environment safe for users of all ages. As the platform continues to grow—in user numbers, complexity of creator content, and global reach—moderation systems must also evolve.

Recent adjustments to how content is reviewed, filtered, and enforced have sparked considerable discussion in the Roblox community. Some users feel the changes improve safety and reduce harmful content, while others argue they are too restrictive, inconsistent, or unclear in application. Developers who depend on moderation tools for their games, communities, and collaborations also feel the impact, for better or worse.

This blog post explores the recent Roblox moderation system changes, what’s been updated or removed, why these changes were introduced, and how they affect different parts of the Roblox community—from everyday players and parents to developers and group admins. Whether you’re a casual player, a seasoned creator, or someone managing a Roblox community, understanding these changes helps you interact more confidently with the platform’s evolving safety landscape.

What Is the Roblox Moderation System

Roblox’s moderation system governs what content is allowed, what behaviors are permitted, and how violations are handled. Its purpose is to protect users from inappropriate content, harassment, scams, and other harmful interactions, while still enabling creativity and free expression where appropriate. The system has multiple layers: automated filters, human moderators, user reporting tools, community standards enforcement, and appeals processes.

Key Components of Roblox Moderation

- Automated Filtering: Scans chat, usernames, place descriptions, models, and uploaded media to block inappropriate material.

- Human Review: Moderators review flagged content where automated filters can’t decide definitively.

- Community Reporting: Players and developers report violations from within games or via web tools.

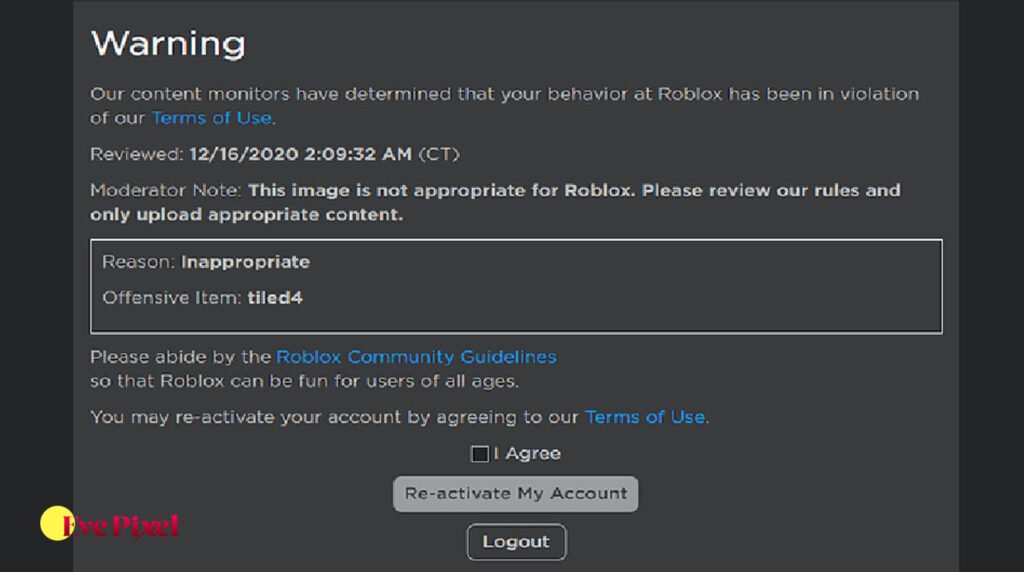

- Enforcement Actions: Warnings, removals, account restrictions, or permanent bans depending on violation severity.

- Appeals & Transparency: Processes where users can request reevaluation of moderation actions.

As Roblox’s user base grows older and more global, the moderation system must keep up with cultural differences, legal requirements (e.g., COPPA, GDPR), and new forms of abuse or exploitation.

What Changed in the 2026 Moderation Updates

In 2026, Roblox introduced several notable changes to its moderation system: strengthened content filtering, revised reporting workflows, risk-based restrictions, emphasis on context-aware moderation, and adjustments to appeal handling. These updates apply not only to chat text but also to uploaded assets, place descriptions, thumbnails, group titles, and in-game behavior. Below are the major shifts that matter most to players and creators:

1. Enhanced Automated Filters

Roblox refined its automated filtering with newer machine-learning models that aim to detect context, intent, and subtle patterns in text and media. This can reduce false negatives (harmful content that slips through) but also risks raising false positives (harmless content flagged incorrectly).
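The trade-off between false negatives and false positives can be made concrete with a toy example. The sketch below assumes a filter that assigns each message a score between 0 and 1 and flags anything at or above a threshold. The scores, labels, and the `count_errors` helper are invented for illustration and say nothing about how Roblox's actual models work.

```python
# Hypothetical (score, is_harmful) pairs; illustrative only, not real Roblox data.
SAMPLES = [
    (0.95, True),
    (0.80, True),
    (0.65, False),  # harmless slang that happens to resemble a violation
    (0.55, True),
    (0.40, False),
    (0.10, False),
]


def count_errors(samples, threshold):
    """Return (false_positives, false_negatives) when flagging scores >= threshold."""
    false_positives = sum(
        1 for score, harmful in samples if score >= threshold and not harmful
    )
    false_negatives = sum(
        1 for score, harmful in samples if score < threshold and harmful
    )
    return false_positives, false_negatives


if __name__ == "__main__":
    # Lowering the threshold catches more harmful content but flags more harmless content.
    for threshold in (0.9, 0.6, 0.3):
        fp, fn = count_errors(SAMPLES, threshold)
        print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

With this toy data, a strict threshold misses harmful messages, while a lenient one flags harmless slang. That is the same tension players describe when jokes get filtered.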

2. Stricter Asset Moderation Rules

Assets—including decals, audio, and models—are now subject to more thorough scanning. Even neutral or artistic content may be examined for context that older filters would have missed, resulting in more proactive removals when the system flags potential issues.

3. Reporting Workflow Overhaul

The in-game reporting user interface was redesigned to guide users through more specific categories and subcategories, helping moderators prioritize cases more efficiently. As a result, reports flagged for deeper review may take longer to resolve, while priority issues are escalated faster.

4. Risk-Based Restrictions

High-risk accounts—determined through behavior signals, reports, or prior moderation actions—now face adaptive restrictions. These may include temporary limits on chat, asset uploads, or group management until the account is verified safe.
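One way to picture adaptive restrictions is as a score built from account signals, with limits added as the score rises. The sketch below is purely illustrative: the `AccountSignals` fields, the weights, the cutoffs, and the restriction names are all assumptions, since Roblox does not publish how it evaluates risk.

```python
from dataclasses import dataclass


@dataclass
class AccountSignals:
    recent_reports: int  # reports received in some recent window (hypothetical field)
    prior_actions: int   # past warnings, removals, or bans (hypothetical field)
    verified: bool       # whether the account has been verified safe


def restrictions_for(signals: AccountSignals) -> list[str]:
    """Map signals to temporary restrictions using made-up weights and cutoffs."""
    if signals.verified:
        # Restrictions lift once the account is verified safe.
        return []
    # Prior moderation actions weigh more heavily than individual reports.
    score = signals.recent_reports + 3 * signals.prior_actions
    restrictions = []
    if score >= 3:
        restrictions.append("chat_limited")
    if score >= 6:
        restrictions.append("asset_uploads_paused")
    if score >= 9:
        restrictions.append("group_management_paused")
    return restrictions


if __name__ == "__main__":
    print(restrictions_for(AccountSignals(recent_reports=3, prior_actions=1, verified=False)))
```

The point of the sketch is the shape of the logic: restrictions stack gradually rather than jumping straight to a ban, and they are removed once the account is cleared.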

5. New Appeal Transparency Features

To address community criticism about black-box decisions, Roblox introduced more detailed appeal feedback. Users now see generalized reasoning for a moderation action (e.g., “Contextual violation: contains prohibited suggestion words”), though exact internal guidelines remain undisclosed to protect the integrity of the safety system.

These moderation changes reflect Roblox’s effort to balance safety with creative freedom, but they also bring trade-offs and real impacts across the ecosystem.

Why Roblox Made These Moderation Changes

Understanding why these changes were introduced helps contextualize community reactions. Roblox’s moderation evolution in 2026 centers on three priorities:

A. Protecting Users Across Ages and Regions

Roblox hosts players from young children to adults across diverse cultures and languages. A moderation system designed around simple keyword blocking no longer suffices. New AI-assisted filtering helps interpret context, detect emerging harmful patterns, and address content that older systems could not.

B. Reducing Abuse and Exploitation

As Roblox’s economy expanded, especially with real-money transactions, group monetization tools, and voice chat, moderation needed to cover not only text chat but also the visual and behavioral channels where toxic or exploitative content might appear.

C. Aligning with Global Safety Standards

Roblox operates in many countries with varying legal requirements around data protection, child safety, and content regulation. The updated moderation system helps align community standards with international cultural and legal expectations, reducing risk of non-compliance.

While community sentiment is mixed, these motivations underscore why moderation evolves alongside platform growth.

How These Changes Impact Everyday Players

Players notice moderation impacts in several ways. Some feel safer and more comfortable in Roblox spaces; others find previously acceptable content is now blocked or removed unexpectedly. The most common player-facing effects include:

More Aggressive Filtering in Chat

Players report that playful jokes or slang are sometimes flagged because newer systems interpret them conservatively. While this reduces offensive content, it can feel restrictive to players used to informal language.

Asset Restrictions Appear Tighter

Thumbnails, decals, or audio that previously passed moderation are now removed or held for review. Players may need to alter creative content to align with new standards.

Harsher Reporting Outcomes for Repeat Offenders

Users who frequently receive reports—whether justified or not—may see escalating restrictions, including temporary chat limits or account review statuses.

Regional and Cultural Context Sensitivity

Some players notice that phrases harmless in one region may trigger filters in another, leading to confusion about inconsistent moderation decisions.

For many players, the overall intent of these changes—safer social spaces—is clear, but the learning curve and occasional over-filtering have sparked debate.

How Developers and Creators Are Affected

Roblox developers and group admins are particularly sensitive to moderation changes because their livelihood or community engagement depends on content visibility. Key impacts for creators include:

Asset Upload Review Delays

Larger games or marketplaces with many uploaded assets may experience slower approval or additional rejections. Creators must be more disciplined about complying with updated content guidelines.

Content Policy Ambiguity

Despite transparency improvements, some creators still find moderation guidelines unclear in edge cases—especially for satire, complex narratives, or spiritual and cultural content.

Increased Reporting and False Positives

Games with large user populations sometimes receive a high volume of reports (mass reporting) from disgruntled players, triggering moderation flags that affect entire games or key assets.

Developer Reputation Signals

Roblox now integrates reputation metrics for developer accounts based on moderation history, content quality, and player feedback. A high reputation means fewer restrictions, while a lower reputation increases scrutiny.

Appeal Workflows Are More Detailed but Cumbersome

While creators receive more context on moderation decisions, the appeals process takes longer when deeper review is required. Some see it as bureaucracy that slows creative iteration.

Developers adapting effectively now build moderation-compliance checks into their pipelines and educate their communities on acceptable content to avoid unnecessary removals.
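A compliance check in a creator's own pipeline can be as simple as scanning text before it is uploaded. The sketch below is a minimal local check, assuming a team maintains its own list of terms that it wants a human to review first. `REVIEW_TERMS`, `find_review_terms`, and `check_assets` are hypothetical names, and the list does not reproduce Roblox's rules or call any Roblox API.

```python
import re

# Hypothetical, creator-maintained list; it does not reproduce Roblox's rules.
REVIEW_TERMS = {"free robux", "password", "off-platform"}


def find_review_terms(text, terms=REVIEW_TERMS):
    """Return the sorted terms that appear in text, ignoring case and extra spaces."""
    normalized = re.sub(r"\s+", " ", text.lower())
    return sorted(term for term in terms if term in normalized)


def check_assets(descriptions):
    """Map each asset name to its matched terms, keeping only assets with matches."""
    results = {}
    for name, text in descriptions.items():
        hits = find_review_terms(text)
        if hits:
            results[name] = hits
    return results


if __name__ == "__main__":
    pending = {
        "thumbnail_text": "Join for FREE   Robux!",
        "model_description": "A low-poly tree",
    }
    for name, hits in check_assets(pending).items():
        print(f"{name}: review before upload ({', '.join(hits)})")
```

A check like this cannot predict what the platform's filters will decide. It only catches wording the team already knows is risky, which saves an upload and review cycle.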

Common Complaints and Community Response

Roblox moderation changes in 2026 have generated both praise and criticism across social platforms:

Players Say Moderation Is Too Restrictive

Some complain that lighthearted or community-specific slang gets filtered unnecessarily, hurting social interaction.

Creators Claim Inconsistent Enforcement

Because automated models and human reviewers are combined differently from case to case, some creators feel enforcement standards vary unpredictably.

Appeal Turnaround Times Can Be Slow

While transparency has improved, turnaround is longer for complex content, frustrating creators and players waiting for resolution.

Over-Reporting and Mass Reporting Effects

Groups of players sometimes use reporting en masse to disrupt a creator’s game or assets, causing moderation flags that may be unjustified.

Despite these complaints, many players and developers also acknowledge the necessity of evolving moderation for safety, especially with voice chat, expanded global reach, and monetization tools.

How to Adapt to the New Moderation System

Whether you’re a player or a creator, adapting to moderation changes involves proactive steps:

1. Understand Updated Community Standards

Familiarize yourself with current Roblox community standards and guidelines. These are periodically revised to reflect moderation changes.

2. Be Clear and Intentional in Chat

Avoid slang or ambiguous phrases that could be misinterpreted by filters. Use clear, respectful language when possible.

3. Plan Assets With Compliance in Mind

Design thumbnails, models, audio, and text with guidelines in mind—avoid borderline content that might trigger automated flags.

4. Monitor Reputation Indicators

Developers should check moderation status dashboards or reputation signals that Roblox provides to anticipate restrictions.

5. Educate Your Community

Game owners and group leaders should inform their players about acceptable behavior and how reporting works to reduce false reports.

6. Use Appeals Thoughtfully

When appealing moderation decisions, provide context and clear reasoning. Detailed justification helps human reviewers understand intent.

By staying informed and proactive, players and developers can reduce friction from moderation changes while benefiting from increased safety.

Why Moderation Changes Matter for Roblox’s Future

As Roblox grows, maintaining a safe, inclusive, and legally compliant platform is essential—not only for children and families but also for creators who build real businesses and communities within Roblox. Moderation changes in 2026 reflect a broader trend in digital platforms: balancing free expression with safety, cultural nuance with automated enforcement, and creative freedom with community standards.

While criticism is natural when systems change, the goal of moderation evolution is to help Roblox thrive as a creative, socially rich, and globally accessible environment.

FAQ

Why is Roblox filtering content more strictly in 2026?

To improve safety, reduce harmful interactions, and comply with global policy requirements, using updated filtering and moderation logic.

Does moderation affect voice chat too?

Yes. Voice features are under similar scrutiny, and inappropriate voice behavior or reported communication can contribute to account restrictions.

Can I appeal a moderation decision?

Yes. Roblox now provides clearer appeal options with context for why actions were taken.

Are false positives common now?

Some users report more false positives due to enhanced automated filters, but appeals help resolve them.

Do moderation changes affect game updates and releases?

Yes—uploaded assets and game descriptions are subject to stricter scanning before approval.

Is Roblox moderation transparent?

Transparency has improved, but exact internal rules remain undisclosed to protect the integrity of the safety system.

Conclusion

The Roblox moderation system changes in 2026 represent a significant shift in how content and behavior are assessed across the platform. These updates aim to make Roblox safer, more inclusive, and better aligned with legal and cultural expectations worldwide. However, with increased filtering, stricter enforcement, and more complex appeal processes, many players and developers feel the impact in ways that require adaptation, education, and patience.

By understanding what changed, why it changed, and how to work constructively with the updated system, players and creators can continue to thrive within Roblox’s evolving ecosystem. Transparency, clear communication, proactive moderation compliance, and community education are key strategies for navigating this new landscape confidently and effectively.